A multi-tenant SaaS application is a Software as a Service (SaaS) application that lets multiple customers (tenants) share a single software instance and its infrastructure while keeping their data and configurations isolated. This approach uses resources efficiently and simplifies maintenance, so updates and new features can be rolled out quickly. A multi-tenant architecture helps businesses lower costs and scale more easily, which makes it a strong choice for service providers that must meet their customers' diverse needs.

Part 1 of this article covers the following topics:

- Multi-tenant architectural models

- Considerations for choosing a multi-tenant model

- Reference architecture using AWS services

Multi-Tenant Architectural Models

A multi-tenant SaaS application can be built on one of several architectural models.

1. Pool Model (Shared)

In the pool model, all tenants share the same underlying infrastructure and services, which optimizes resource utilization and cost.

Pros

- Cost Efficiency: Lower operational costs due to shared resources.

- Simplified Management: Single codebase and database instance reduce maintenance efforts.

- Scalability: Easier to scale horizontally by adding resources without significant architectural changes.

Cons

- Data Isolation Risks: Higher risk of accidental data leakage between tenants if proper access controls are not implemented.

- Performance Bottlenecks: Heavy load from one tenant can degrade performance for the others (the "noisy neighbor" problem).

- Limited Customization: Tenants have limited ability to customize their environments.

- Cost Tracking: Attributing costs to individual tenants is difficult because all tenants use the same underlying infrastructure.
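
The data isolation risk in a pooled database is usually addressed by scoping every query to a tenant identifier. The following is a minimal sketch of that idea, not a production design: it uses Python's built-in `sqlite3` module as a stand-in for a shared relational database, and the `orders` table, the `tenant_id` column, and the helper function names are all assumptions made for illustration.

```python
import sqlite3


def create_shared_store() -> sqlite3.Connection:
    """Create an in-memory database in which all tenants share one table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders ("
        "id INTEGER PRIMARY KEY, "
        "tenant_id TEXT NOT NULL, "  # hypothetical column used for logical separation
        "item TEXT NOT NULL)"
    )
    return conn


def add_order(conn: sqlite3.Connection, tenant_id: str, item: str) -> None:
    """Store a row tagged with the tenant that owns it."""
    conn.execute(
        "INSERT INTO orders (tenant_id, item) VALUES (?, ?)", (tenant_id, item)
    )


def list_orders(conn: sqlite3.Connection, tenant_id: str) -> list:
    """Return only the rows owned by the given tenant."""
    rows = conn.execute(
        "SELECT item FROM orders WHERE tenant_id = ? ORDER BY id", (tenant_id,)
    )
    return [row[0] for row in rows]
```

Routing all reads through a helper such as `list_orders` keeps the tenant filter in one place, which lowers the chance that a query omits it by accident.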

2. Silo Model (Dedicated)

In this model, each tenant has its own application and infrastructure, which gives greater control and allows customization for that tenant's specific needs. This model suits organizations with stringent regulatory compliance requirements because each tenant's data is kept fully separate from that of other tenants.

Pros

- Maximum Data Isolation: Each tenant’s data and applications are fully isolated, enhancing security and compliance.

- Performance Control: Dedicated resources can be optimized for specific tenant needs, ensuring predictable performance.

- Easier Compliance: Simplifies compliance with regulations that require strict data isolation.

- Cost Tracking: Since this model uses a dedicated environment, it provides a simple way to capture and associate infrastructure costs with each tenant.

- Limited Blast Radius: Because each tenant runs in its own environment, a failure within one tenant's environment is unlikely to cascade to other tenants.

Cons

- Higher Costs: More expensive due to the need for separate infrastructure for each tenant.

- Management Overhead: Increased operational complexity in managing multiple environments and deployments.

- Resource Underutilization: Risk of over-provisioning resources, leading to wasted capacity.

3. Bridge Model (Hybrid)

The bridge model is a hybrid that applies the silo or the pool model to different tenants, or to different parts of the system, based on customer needs. This approach offers the flexibility to balance cost efficiency against isolation requirements.

Pros

- Flexibility: Allows for a mix of shared and dedicated resources based on tenant needs.

- Targeted Resource Allocation: Can optimize performance for critical tenants while sharing resources with others.

- Partial Isolation: Sensitive data can be isolated while less sensitive data remains shared.

Cons

- Increased Complexity: More complicated to manage due to the hybrid nature of the architecture.

- Cost Tracking: Requires careful tracking of resource usage across shared and dedicated components to attribute costs to each tenant.

- Integration Overhead: May need additional integration points between shared and dedicated components.
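
One way to picture the bridge model is as a routing decision made per tenant. The sketch below assumes a hypothetical `tier` attribute on each tenant and hypothetical database names; it only illustrates the idea that some tenants are sent to a dedicated (silo) resource while the rest share a pooled one.

```python
from dataclasses import dataclass

# Hypothetical name of the database shared by pooled tenants.
POOL_DATABASE = "shared-db"


@dataclass(frozen=True)
class Tenant:
    tenant_id: str
    tier: str  # assumed values: "standard" (pooled) or "premium" (siloed)


def resolve_database(tenant: Tenant) -> str:
    """Pick a dedicated database for premium tenants, the pool otherwise."""
    if tenant.tier == "premium":
        # Silo: one database per tenant (naming scheme is an assumption).
        return f"db-{tenant.tenant_id}"
    # Pool: all remaining tenants share one database.
    return POOL_DATABASE
```

For example, `resolve_database(Tenant("acme", "premium"))` returns `"db-acme"`, while a standard-tier tenant resolves to `"shared-db"`. The same lookup could be applied to compute or storage resources.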

Considerations for Choosing a Multi-Tenant Model

Choosing the right multi-tenant model is a critical decision that involves balancing several factors, including security and compliance, cost efficiency, scalability, and business strategy.

Security and Compliance

- Assess the security requirements and the sensitivity of tenant data against regulatory requirements such as data isolation, data retention, and access control.

Cost Efficiency

- Compare infrastructure and operational costs between shared and dedicated resources.

Scalability & Performance

- Evaluate the ability to grow in terms of the number of tenants and data volume, ensuring the model can accommodate future growth without significant redesign.

- Consider the impact of resource contention in shared environments, such as a noisy tenant degrading performance for others, and ensure the model can maintain acceptable performance levels for all tenants.
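
A common mitigation for resource contention in shared environments is to throttle requests per tenant. The following sketch shows one possible approach, a token bucket kept separately for each tenant. The class name, parameters, and the choice of a token bucket are assumptions for illustration; the article does not prescribe a specific throttling technique. The current time is passed in explicitly so the behavior is easy to reason about.

```python
class TenantThrottle:
    """Per-tenant token bucket: each tenant gets its own request budget."""

    def __init__(self, capacity: int, refill_per_second: float) -> None:
        self.capacity = float(capacity)
        self.refill_per_second = refill_per_second
        self._buckets = {}  # tenant_id -> {"tokens": float, "last": float}

    def allow(self, tenant_id: str, now: float) -> bool:
        """Return True if the tenant may make a request at time `now` (seconds)."""
        bucket = self._buckets.get(tenant_id)
        if bucket is None:
            # A new tenant starts with a full bucket.
            bucket = {"tokens": self.capacity, "last": now}
            self._buckets[tenant_id] = bucket

        # Refill in proportion to elapsed time, capped at the bucket capacity.
        elapsed = max(0.0, now - bucket["last"])
        bucket["tokens"] = min(
            self.capacity, bucket["tokens"] + elapsed * self.refill_per_second
        )
        bucket["last"] = now

        if bucket["tokens"] >= 1.0:
            bucket["tokens"] -= 1.0
            return True
        return False
```

Because each tenant has a separate bucket, a burst from one tenant exhausts only that tenant's budget and leaves the others unaffected.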

Customization and Flexibility

- Determine the level of customization required by tenants and whether different configurations or features are necessary.

Management Complexity

- Assess the operational overhead involved in management and maintenance of each model.

Business Strategy

- Align the multi-tenant architecture with overall business objectives and customer needs to enhance market differentiation and competitive advantage.

- Choose a model that can adapt to changing business requirements and technological advancements.

Reference Architecture using AWS Services

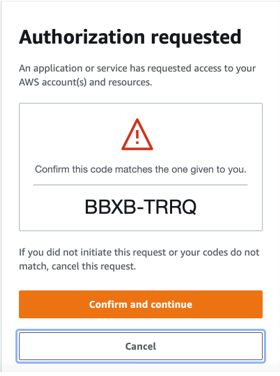

Amazon Cognito user pools manage user authentication and identity, providing a secure and tailored experience for each tenant. This includes features such as user sign-up, sign-in, and multi-factor authentication. Cognito also supports custom user attributes, which let the application store and retrieve tenant-specific information easily. After a user authenticates, Cognito issues JSON Web Tokens (JWTs) that carry user identity and authorization details, so the application can validate requests and enforce security policies.
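
To show how tenant context might be read from such a token, here is a minimal sketch that decodes the payload segment of a JWT and extracts a tenant claim. It makes two assumptions: the tenant is stored in a custom attribute named `custom:tenant_id` (a hypothetical name), and the token's signature has already been verified elsewhere. This code does NOT verify the signature, so it must not be used on its own to authorize requests.

```python
import base64
import json

# Hypothetical custom attribute holding the tenant identifier.
TENANT_CLAIM = "custom:tenant_id"


def decode_claims_unverified(token: str) -> dict:
    """Decode the JWT payload WITHOUT verifying the signature."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("expected a JWT with three dot-separated segments")
    payload = parts[1]
    # JWT segments are base64url-encoded with padding stripped; restore it.
    payload += "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(payload))


def tenant_from_token(token: str) -> str:
    """Return the tenant identifier carried in the token's claims."""
    claims = decode_claims_unverified(token)
    tenant_id = claims.get(TENANT_CLAIM)
    if not tenant_id:
        raise PermissionError("token carries no tenant claim")
    return tenant_id
```

In a real service, the signature, expiry, issuer, and audience would be checked against the user pool's published keys before any claim is trusted.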

After authentication, user requests pass through an AWS Application Load Balancer (ALB), which distributes incoming traffic to the relevant services running on Amazon EKS. EKS orchestrates the containerized applications and uses Kubernetes namespaces to give each tenant a logically separate environment, supporting resource separation and security.
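
As an illustration of namespace-per-tenant separation, the sketch below builds Kubernetes manifests for a tenant as plain Python dictionaries: a `Namespace` and a `ResourceQuota` that caps what the tenant can request. The naming scheme, the label, the quota values, and the use of a quota at all are assumptions for illustration, not part of the reference architecture.

```python
from typing import Any, Dict, List


def tenant_namespace_manifests(
    tenant_id: str, cpu: str = "2", memory: str = "4Gi"
) -> List[Dict[str, Any]]:
    """Build a Namespace and a ResourceQuota manifest for one tenant."""
    namespace = f"tenant-{tenant_id}"  # hypothetical naming scheme
    return [
        {
            "apiVersion": "v1",
            "kind": "Namespace",
            "metadata": {"name": namespace, "labels": {"tenant": tenant_id}},
        },
        {
            "apiVersion": "v1",
            "kind": "ResourceQuota",
            "metadata": {"name": "tenant-quota", "namespace": namespace},
            # Caps the total CPU and memory that pods in the namespace may request.
            "spec": {"hard": {"requests.cpu": cpu, "requests.memory": memory}},
        },
    ]
```

The resulting dictionaries can be serialized to JSON or YAML and applied with the usual Kubernetes tooling. Namespaces provide logical separation only, so network policies and access controls would typically accompany them.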

For data management, Amazon RDS serves as the relational database layer. Depending on tenant needs, it can be used either as a shared database with logical separation between tenants or as dedicated databases for stronger isolation.

Summary

Selecting the right multi-tenant model involves balancing security, cost, scalability, customization, and management complexity. Evaluate these factors carefully against your business objectives, then choose the model that best fits your needs.